Sunday, October 6, 2013

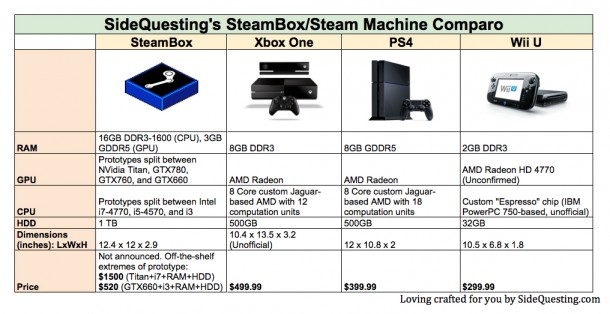

Rumored SteamBox Specs

Looks like it will kill the next gen consoles in terms of performance, even while turned off.

Thursday, September 26, 2013

AMD's new API - Codenamed: Mantle. It Supposedly Makes Everything Obsolete.

Mantle and Graphics Core Next: Simplifying Cross-Platform Game Development

The award-winning Graphics Core Next (GCN) architecture in the AMD Radeon R9 and R7 Series graphics cards continues to serve as a driving force behind the Unified Gaming Strategy, AMD's approach to providing a consistent gaming experience on the PC, in the living room or over the cloud -- all powered by AMD Radeon graphics found in AMD graphics cards and accelerated processing units (APUs). The four pillars of the Unified Gaming Strategy -- console, cloud, content and client -- come together with the introduction of Mantle.

With Mantle, games like DICE's "Battlefield 4" will be empowered with the ability to speak the native language of the Graphics Core Next architecture, presenting a deeper level of hardware optimization no other graphics card manufacturer can match. Mantle also assists game developers in bringing games to life on multiple platforms by leveraging the commonalities between GCN-powered PCs and consoles for a simple game development process.

With the introduction of Mantle, AMD solidifies its position as the leading provider of fast and efficient game development platforms. Mantle will be detailed further at the AMD Developer Summit, APU13, taking place Nov. 11-13 in San Jose, Calif.

Source: http://www.techpowerup.com/191453/amd-gpu14-event-detailed-announces-radeon-r9-290x.html

AMD claims Mantle enables nine times more draw calls per second than other APIs, which is a huge increase in performance.

Source: http://www.techspot.com/news/54134-amd-launches-mantle-api-to-optimize-pc-gpu-performance.html

SteamBox / Steam Machine / SteamOS

Valve is finally doing what we have all been hoping for. Skynet... err... I mean a new console / open PC format / and an operating system.

SteamOS will be based on Linux. Many fascinating details behind Valve's strategy can be found at the source.

Source: http://store.steampowered.com/livingroom/SteamMachines/

AMD Radeon R9 290X

AMD announced the new Radeon R9 290X, its next-generation flagship graphics card. Based on the second-generation Graphics Core Next micro-architecture, the card is designed to outperform everything NVIDIA has at the moment, including a hypothetical GK110-based graphics card with 2,880 CUDA cores. It's based on the new "Hawaii" silicon, with four independent tessellation units, close to 2,800 stream processors, and 4 GB of memory. The card supports DirectX 11.2, and could offer an inherent performance advantage over NVIDIA's GPUs at games such as "Battlefield 4". Battlefield 4 will also be included in an exclusive preorder bundle. The card will be competitively priced against NVIDIA's offerings. We're awaiting more details.

AMD announced the R9 series for enthusiasts and gamers; R7 for price-conscious buyers

AMD lists out a new product stack

R7 250 and R7 260 under $150, R9 270X under $200, R9 280X $299, and R9 290X

R9 290X Battlefield 4 Edition

Three pillars of the R9 series: GCN 2.0, 4K, TrueAudio, etc.

- DirectX 11.2 and improved energy efficiency

- >5 TFLOP/s compute power

- >300 GB/s memory bandwidth

- >4 billion triangles/sec

- >6 billion transistors

Source: http://www.techpowerup.com/191453/amd-gpu14-event-detailed-announces-radeon-r9-290x.html

Wednesday, September 11, 2013

STEAM/Valve Announce Family Sharing Plan!

September 11, 2013 – Steam Family Sharing, a new service feature that allows close friends and family members to share their libraries of Steam games, is coming to Steam, a leading platform for the delivery and management of PC, Mac, and Linux games and software. The feature will become available next week, in limited beta on Steam.

A bit of good news on a sad day!

Source: http://store.steampowered.com/news/11436/

Thursday, September 5, 2013

AMD Catalyst 13.10 Beta Driver Released (Supports Windows 8.1)

Feature Highlights of The AMD Catalyst 13.10 Beta Driver for Windows:

AMD Catalyst 13.10 Beta includes all of the features/fixes found in AMD Catalyst 13.8 Beta2

PCI-E bus speed is no longer set to x1 on the secondary GPU when running in CrossFire configurations

Rome Total War 2: Updated AMD CrossFire™ profile

- improves CrossFire scaling up to 20% (at 2560x1600 with extreme settings)

- resolves corruption issues seen while playing the game

- improves CrossFire scaling up to 20% (at 2560x1600 with ultra settings)

- improves CrossFire scaling up to 10% (at 2560x1600 with ultra settings)

Includes a number of frame pacing improvements for the following titles:

- Tomb Raider

- Metro Last Light

- Sniper Elite

- World of Warcraft

- Max Payne 3

- Hitman Absolution

Wednesday, September 4, 2013

Xbox One CPU Speed Boosted

A few sources have discovered that Microsoft has boosted the Xbox One's CPU by 150 MHz, bringing it to 1.75 GHz. This is in addition to the GPU boost last month from 800 MHz to 853 MHz. This is good for consumers, since every little bit helps, but from a professional standpoint I see one of two things:

1.) They were rushed in planning the console.

2.) There is a noticeable discrepancy in performance compared to the rival (PS4) and this is an attempt to bridge the gap.

In all likelihood, it could be both: their unrealistic and unwanted policies being changed is a sign of a disconnect with the community, or potentially of haphazard ideas thrown together hastily. One thing is for sure: we don't know the actual clock speeds of the PS4's hardware, but from the start its GPU has been approximately 50% more powerful at rendering than the Xbox One's. Realistically, we will see this show up as a 10~30% boost in frame rates at equal settings, possibly more as developers get more used to the hardware in question.

Source: http://www.ign.com/articles/2013/09/04/xbox-one-is-now-in-full-production-with-an-improved-cpu
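For a sense of scale, here is a quick sketch of what those clock bumps work out to in percentage terms. The 1.6 GHz starting point is not stated outright above; it is inferred from 1.75 GHz minus the 150 MHz boost, and `percent_increase` is just an illustrative helper name.

```python
# Rough sketch: size of the Xbox One clock boosts as percentages.
# Assumption: the original CPU clock is the new clock minus the 150 MHz boost.

def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


cpu_new_mhz = 1750
cpu_old_mhz = cpu_new_mhz - 150   # inferred baseline of 1600 MHz
gpu_old_mhz, gpu_new_mhz = 800, 853

cpu_gain = percent_increase(cpu_old_mhz, cpu_new_mhz)
gpu_gain = percent_increase(gpu_old_mhz, gpu_new_mhz)

print(f"CPU clock: +{cpu_gain:.1f}%")
print(f"GPU clock: +{gpu_gain:.1f}%")
```

Both bumps land under 10%. Clock speed is only one factor, so these percentages are not frame-rate predictions.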

PS4 looks to be using Virtual Reality!

Sony is said to be announcing official VR plans at the Tokyo Game Show 2013. Not only is the headset rumored to be based on Oculus Rift tech, but Sony already has a prototype of DriveClub working with it.

Very nice, coming on the heels of the Xbox One supposedly trumping the PS4 by supporting 8 controllers, meaning 8 simultaneous players. I personally don't care, but I guess some partygoers will enjoy 8 players on the same screen.

Source: http://www.extremetech.com/gaming/165609-sonys-next-xbox-killing-move-developing-a-ps4-virtual-reality-headset

Hynix has had a major fire!

Prepare for major price increases on OEMs and RAM.

Source: http://www.chiphell.com/forum.php?mod=viewthread&tid=850052

Hynix said the fire raged for more than an hour. After an initial assessment, the world's No. 2 maker of dynamic random access memory (DRAM) chips said it found no "material" damage to fabrication gear in its clean room at the plant, which produces around 12 to 15 percent of global computer memory chips.

Reuters: http://www.reuters.com/article/2013/09/04/china-hynix-suspension-idUSL4N0H03CJ20130904

Xbox One - Q&A about Features

Will digitally downloaded games be able to be pre-loaded before release, so that they're simply unlocked and ready to go at 12:01am on the day of release?

MARC WHITTEN, CHIEF XBOX ONE PLATFORM ARCHITECT: Not at launch, but you’ll see us do this and much more over the life of the program.

If I sign in to Xbox Live on my Xbox One, will I also be able to sign in on the same account on my 360 at the same time? Say someone is using the Xbox One in the living room and I'm playing my 360 in my bedroom. Will I be able to use the same account?

WHITTEN: Yes this works! You’ll be able to be signed on with the same gamertag on both an Xbox 360 and an Xbox One console at the same time. However, like today, you can only be signed into one Xbox 360 and one Xbox One at a time.

Given that Rep (Reputation) will now factor into Xbox One's online matchmaking (whereas it didn't before on the Xbox 360), will everyone's Rep be reset to provide an initial playing field? Since there are new rules, shouldn't everyone be able to demonstrate themselves as good players right from the start? Or will players be penalized for past mistakes? In other words, will previous account infractions carry over to Xbox One?

WHITTEN: We will not carry over any of the 360 reputation scores into the new Xbox One reputation system. The majority of members will start fresh at the “Good” player level.

We will be working with the Xbox Live enforcement team that has identified a small subset of members that have recently had enforcement actions taken against them and set those members reputation to an initial “Needs Works” level. This will give those members a chance to prove they can participate on Live fairly, and are not automatically placed in the “Avoid Me” classification where things like SmartMatch filtering will affect them.

Will Xbox One work as a Windows Media Center Extender? For those that use a PC with TV tuner to record and watch TV, we use the 360 as an extender to stream this content to TVs. Will this continue with Xbox One?

WHITTEN: Xbox One isn’t a native Media Center Extender. We’ll continue to work to enable more ways for everyone to get the television they want over the life of the program.

Much, much more at the source. Some of these are puzzling.

Source: http://www.ign.com/articles/2013/08/05/ask-microsoft-anything-about-xbox-one

Xbox One Launching November 22nd (after PS4)

This is it: the battle for the next generation console market is on.

Microsoft has finally revealed the launch date for its Xbox One machine – it will hit retail in 13 major territories including North America and the UK on 22 November.

Source: http://www.theguardian.com/technology/2013/sep/04/xbox-one-launch-22-november

The Xbox One is launching in the US after the PS4, but is actually beating Sony to the UK by arriving 7 days earlier.

HDMI 2.0 Officially Announced, Supposedly Supports 4K @ 60FPS

With a bandwidth capacity of up to 18Gbps, it has enough room to carry 3,840 x 2,160 resolution video at up to 60fps. It also has support for up to 32 audio channels, "dynamic auto lipsync" and additional CEC extensions. The connector itself is unchanged, which is good for backwards compatibility but may disappoint anyone hoping for something sturdier

Maximum TMDS Throughput Per Channel: 6 Gbit/s (including overhead) / Was 3.4

Maximum total TMDS throughput: 18 Gbit/s (including overhead 8b/10b) / Was 10.2

Maximum actual Throughput: 14.4 Gbit/s (overhead removed) / was 8.16

Maximum audio: 36.86 Mbit/s / same

Maximum Color Depth: 48 bits per pixel / same

The 8b/10b overhead comes from encoding the signal: every 8 bits of data are sent as 10 bits on the wire, so 20% of the raw rate is lost. Seems like a very steep overhead to me.

Good news though, it does support 2560x1600 @ 109 FPS, perhaps slightly less.

I am also not quite convinced about 4K at 60 FPS. At 32bpp, 14.4 Gbit/s only gets you about 54 FPS. At 24bpp it is about 72 FPS.
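The numbers above can be reproduced with some back-of-the-envelope math. This is a rough sketch, assuming only the visible pixels are sent (no blanking intervals or audio), so real-world figures would come out somewhat lower. The function names are made up for illustration.

```python
# Rough sketch: frame rate limits from HDMI throughput.
# Assumption: only active pixels count (no blanking, no audio).

def effective_gbps(raw_gbps: float) -> float:
    """Strip 8b/10b overhead: 8 payload bits for every 10 bits sent."""
    return raw_gbps * 8 / 10


def max_fps(width: int, height: int, bits_per_pixel: int, gbps: float) -> float:
    """Frames per second that fit in `gbps` of payload bandwidth."""
    bits_per_frame = width * height * bits_per_pixel
    return gbps * 1e9 / bits_per_frame


hdmi_2_0 = effective_gbps(18.0)  # payload rate for HDMI 2.0, in Gbit/s

for label, w, h, bpp in [
    ("4K @ 32bpp", 3840, 2160, 32),
    ("4K @ 24bpp", 3840, 2160, 24),
    ("2560x1600 @ 32bpp", 2560, 1600, 32),
]:
    print(f"{label}: {max_fps(w, h, bpp, hdmi_2_0):.1f} FPS")
```

Swapping in `effective_gbps(10.2)` gives the same estimates for the older 10.2 Gbit/s limit.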

One interesting thing while going through the Engadget comments was running into this gentleman:

@BuyudunBebegim 18 Gbps is apparently enough to fit 4K 120Hz.

4K 120Hz, using 4:2:2 chroma, is only 15.9 Gbps, though reduced blanking intervals would add at least 0.5 Gbps to the total. There's no technical reason why 4K 120Hz can't be achieved over HDMI 2.0, as long as you're willing to accept 4:2:2 chroma instead of 4:4:4 chroma. People are already successfully doing 1080p@120Hz over HDMI 1.3a (A few new models of HDTV's can accept true 120Hz now; no interpolation -- there's a list on the Net now.) and the HDMI clockrate isn't even being overclocked at all.

There's currently an initiative in a HardForum thread (SEIKI 4K thread, beginning around approximately page 15) to come up with a 120Hz modification of an existing 4K panel, with some thousand-dollar pledges. We've discovered the panel is 120Hz capable, but the TCON is not; and we are trying to figure out if electronics parts on the market exists to bypass the TCON limitation... It would take probably several LVDS channels to pull this off, though. Some people are quite determined to see 4K 120Hz happen. We were looking at DisplayPort channels as the method, but HDMI 2.0 could actually make this easier.

Mark Rejhon (Owner of Blur Busters)

I was quick to chime in, though, about the problems with overhead:

You are forgetting about 8b/10b overhead for ECC and encoding. You start with a maximum throughput of 14.4Gbit/s, unless you find a way to bypass ECC. As the imagesize gets larger, the more than likely ECC has to be used and it is proportional in bandwidth to the bandwidth of the transmission.

Or in other words, the more bandwidth you add, the more you need in encoding/ECC.

You could in theory with 4:2:2 get about 105 FPS, going off of your 15.9 Gbit/s.

I wasn't quite sure where he got the 15.9 Gbit/s from, but I used the ratio of bandwidths (15.9/14.4) to estimate the lower supported frame rate: 120 / (15.9/14.4) comes to about 108.7 FPS. I then rounded down by about 4 FPS to get 105.
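The scaling step is simple enough to write down. This is only a sketch of the estimate described above, assuming frame rate scales linearly with available bandwidth; `scaled_fps` is a hypothetical helper name.

```python
# Sketch: scale a target frame rate by the ratio of available to required bandwidth.
# Assumption: frame rate scales linearly with payload bandwidth.

def scaled_fps(target_fps: float, required_gbps: float, available_gbps: float) -> float:
    """Frame rate achievable when less bandwidth is available than required."""
    return target_fps * available_gbps / required_gbps


# 4K 120Hz at 4:2:2 was quoted as needing 15.9 Gbit/s; 14.4 Gbit/s remains
# after 8b/10b overhead is removed from HDMI 2.0's 18 Gbit/s.
estimate = scaled_fps(120, 15.9, 14.4)
print(f"{estimate:.1f} FPS before rounding down")
```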

Thursday, August 29, 2013

AMD to Start Shipping Next-Generation Graphics Processors in October

While new hardware from team RED is really well overdue, it seems that they may actually be starting to believe it themselves. A lot of rumors have been going around lately that the Hawaii Island chips may be out by the end of the year.

According to Xbit, they will be coming as early as October!

Source: http://www.xbitlabs.com/news/graphics/display/20130820222639_AMD_to_Start_Shipping_Next_Generation_Graphics_Processors_in_October.html

Geforce GTX 790 and Titan Ultra? Possibly in the works.

There have been a couple of rumors that the 790 was in the works, in addition to the massive amount of hope for a dual GK110 from the community, myself included. I can't lie, it would beat my 690s into a pulp, but I think it would be an engineering feat to get them sandwiched together.

Rumors coming out of Fudzilla state that the 790 is in the works (GK110s), and possibly a proper Tesla unit, Titan Ultra, with higher clock rates and all SMXs working.

Fudzilla: http://www.fudzilla.com/home/item/32360-nvidia-reportedly-working-on-two-new-cards

VideoCardz also believes there is a Titan Ultra in the works, but thinks it and the 790 are the same card rather than two individual cards being worked on.

VideoCardz: http://videocardz.com/45403/nvidia-to-launch-more-cards-this-year-maxwell-in-q1-2014

Not to mention nVidia's official plans include Maxwell around the end of Q1 2014. Rumors also suggest the new Radeon HDs have been pushed closer to production (to as soon as November?!).

What neither rumor tells you, however, is the process node. The new cards don't seem likely to be running on 20nm if the rumors are true, and if they aren't on 20nm... why release more of the same cards? We do know that TSMC still isn't mass producing on the smaller process, and by my calculations (based on nVidia's GFLOPS-per-watt roadmap slide), it isn't possible to release the intended Maxwell without a die shrink.

With all this focus on the new console generation as well, AMD has been having to spend a lot of its fabrication queue on chips for the Xbox One, PS4, Wii, Wii U, and Xbox 360.

These rumors seem at best wishful thinking for now; only these two giants really know what is going on.

Wednesday, August 28, 2013

MSI Geforce GTX 780 3GB Lightning Is Out!

If only I had the cash to splurge... who does anymore? It is very pricey, but it is good to see custom GTX 780s, as I predicted. It will definitely give Titans a run for their money.

PCPER Review: http://www.pcper.com/reviews/Graphics-Cards/MSI-GeForce-GTX-780-3GB-Lightning-Graphics-Card-Review

PS4 vs Xbox One - Pre-Order Totals to August 24th 2013

As of the week ending August 24, the PlayStation 4 leads in total US pre-orders with about 600,000. This is up significantly since E3, when there were only 75,000 pre-orders. The Xbox One is still lagging behind with 350,000 pre-orders. This is also way up since E3, when there were only 45,000 pre-orders.

HSA targets native parallel execution in Java virtual machines by 2015

Industry consortium HSA Foundation intends to bring native support for parallel acceleration in Java virtual machines, which would make it easier to tap into multiple processors like graphics processors to speed up code execution.

Source: http://www.pcworld.com/article/2047422/hsa-targets-native-parallel-execution-in-java-virtual-machines-by-2015.html

Xbox One November 8th Release Date Debunked

The source says to expect the console at a date later than November 8, though obviously still in the month of November, as that's the release window Microsoft has promised for some time.

Source: http://kotaku.com/the-xbox-one-will-not-launch-on-november-8-after-all-1211069173

PS4 / Xbox One Potential Release Dates

Microsoft is being tight-lipped about its release date, but the rumors have been pointing to around November 8th, a Friday, at midnight. This is interesting because the PS4 release date is supposed to be November 15th, also a Friday, at midnight.

PS4 goes on sale internationally two weeks after:

Xbox One Date Source: http://www.denofgeek.com/games/xbox-one/27042/xbox-one-to-release-ahead-of-ps4

Xbox One Source 2: http://kotaku.com/the-xbox-one-might-be-out-on-november-8-1204282442

PS4 International Date Source: http://www.latinpost.com/articles/1690/20130827/ps4-release-date-price-specs-overseas-market-debut-two-weeks.htm

PS4 Source 2: http://www.hypable.com/2013/08/20/ps4-release-date-revealed/

PS4 vs. Xbox One - The Bribery! Gamestop managers spoiled and a white Xbox One?

I first heard about this yesterday and wasn't going to post anything, however, it is obviously a little funnier now that both Sony and Microsoft are one upping each other.

At least Microsoft is back in the fight.

GameStop managers will receive PlayStation 4 units and seven games at launch, GameStop has confirmed to Joystiq. The announcement was made at the company's GameStop EXPO, currently taking place in Las Vegas.

A tipster informs us the games to be given with the console include: Killzone: Shadowfall, NBA 2K14, Madden, FIFA 14, Need for Speed: Rivals, Battlefield 4 and Beyond: Two Souls. That final one being a curiosity if we're to assume managers will receive PS4 versions of the listed games, since to the best of our knowledge, and reiterated by Sony in follow-up, Beyond is only for PS3.

Source: http://www.joystiq.com/2013/08/26/gamestop-managers-to-receive-ps4-seven-games/

Shortly afterwards, we all heard this notice:

Turns out, in addition to a PlayStation 4, GameStop managers will also receive an Xbox One this holiday season. The announcement was made at the retailers' expo in Las Vegas a few hours after it revealed managers would receive a PS4 and seven games.

Source: http://www.joystiq.com/2013/08/27/gamestop-managers-to-receive-xbox-one/

Neowin reached out to GameStop and the company confirmed the detail. The Xbox One console will be given to all the general managers of the company's 6,500 stores, whether or not they attended the expo. No word on whether it will also include software.

Also mentioned was that the Microsoft employees will receive a white Xbox One:

I personally like the look of this much better, especially considering white is the new black, and it has more in common with the Xbox 360 than with a TiVo.

Source: http://www.engadget.com/2013/08/26/white-xbox-one/

PS4 Full Hardware Capabilities will take 3 to 4 years to Surface

Sony's PlayStation 4 has already been reported to have some nifty performance advantages over the Xbox One. The PS4 vs. Xbox One breakdown I did is here.

According to Mark Cerny, PS4's lead architect:

"It's a supercharged PC architecture, so you can use it as if it were a PC with unified memory," he said. "Much of what we're seeing with the launch titles is that usage; it's very, very quick to get up to speed if that's how you use it. But at the same time, then you're not taking advantage of all the customisation that we did in the GPU. I think that really will play into the graphical quality and the level of interaction in the worlds in, say, year three or year four of the console."

In my experience, one could infer that he means the second and third rounds of PS4 AAA games will unveil the majority of the potential of the console.

Source: http://www.gamespot.com/news/cerny-full-hardware-capabilities-of-ps4-may-take-three-to-four-years-to-be-harnessed-6413767

AMD Radeon HD 7000 Series getting DX11.2 Support. Kepler Support Better Explained.

Usually between revisions of DirectX, such as from 10 to 11, there are major changes in the underlying API/code. With the recent point releases (service packs, patches, and whatnot), this has not been the case.

DX11.1 and 11.2 were mostly software-side optimizations; however, the Radeons do have full hardware support even for the optional feature sets in DX11.1/11.2 (optional as declared by Microsoft, not nVidia ;). In AMD's case, the updates really could be supported driver-side. AMD is showing this by releasing support for the upcoming DX11.2 (via Windows 8.1) in a driver update.

"The Radeon HD 7000 series hardware architecture is fully DirectX 11.2-capable when used with a driver that enables this feature. AMD is planning to enable DirectX 11.2 with a driver update in the Windows 8.1 launch timeframe in October, when DirectX 11.2 ships. Today, AMD is the only GPU manufacturer to offer fully-compatible DirectX 11.1 support, and the only manufacturer to support Tiled Resources Tier-2 within a shipping product stack."

Pcper: http://www.pcper.com/news/Graphics-Cards/GCN-Based-AMD-7000-Series-GPUs-Will-Fully-Support-DirectX-112-After-Driver-Updat

MaximumPC: http://www.maximumpc.com/driver_update_will_add_directx_112_support_amd_radeon_hd_7000_series_gpus

What AMD stated is pretty much true: roughly 90% was software side, and they have the ability to support the extra 10% with dedicated hardware.

According to BON (Brightside of the News), who first reported that the HD 7000 series would supposedly support DX11.1 in hardware, nVidia weighed in on the situation at the time. Four features were left out on Kepler's side, which nVidia stated it didn't think pertained to gaming.

nVidia's Quote:

"The GTX 680 supports DirectX 11.1 with hardware feature level 11_0, including all optional features. This includes a number of features useful for game developers such as:

- Partial constant buffer updates

- Logic operations in the Output Merger

- 16bpp rendering

- UAV-only rendering

- Partial clears

- Large constant buffers

We did not enable four non-gaming features in Hardware in Kepler (for 11_1):

- Target-Independent Rasterization (2D rendering only)

- 16xMSAA Rasterization (2D rendering only)

- Orthogonal Line Rendering Mode

- UAV in non-pixel-shader stages

So basically, we do support 11.1 features with 11_0 feature level through the DirectX 11.1 API. We do not support feature level 11_1. This is a bit confusing, due to Microsoft naming. So we do support 11.1 from a feature level for gaming related features."

From my understanding, having looked up Microsoft's naming convention: feature level 11_0 was released with DirectX 11, and features specific ONLY to 11.1 were deemed 11_1. nVidia then went on to state that they do support 11_0 (which is a part of DX11.1) but not 11_1, the 10% that requires specific hardware. It is somewhat confusing on Microsoft's side, but to me it seems nVidia is trying a little damage control.

So, to my original statement: most of DX11.1 was software updates and therefore should run on DX11.0 hardware. You cannot do the same for an entire family revision (e.g. DX12). The DX11_1 features not included in hardware could very well be covered by workarounds for developers in the driver (call this function for this effect, etc.). The same can be said of the DX11_2 release. There is not much in the way of substantial change.

Source: http://www.brightsideofnews.com/news/2012/11/21/nvidia-doesnt-fully-support-directx-111-with-kepler-gpus2c-bute280a6.aspx

It is all still rather more straightforward on AMD's side than on nVidia's, but I did find this immensely helpful breakdown on AnandTech's forums.

OK. Enough with this kind of misunderstanding.

Let's see the facts:

- DX11.2 will be supported by any DX11 or DX11.1 GPUs.

- Tiled Resources support is not mandatory, it's just an option.

I created a list that contains the most relevant gaming features in DX11.1/11.2 and the hardware support for it.

Mandatory features:

Logic operations in the Output Merger:

NVIDIA Kepler: Yes

NVIDIA Fermi: Yes

AMD GCN: Yes

AMD VLIW4/5: Yes

Intel Gen7.5: Yes

Intel Gen7: No

UAV-only rendering:

NVIDIA Kepler: Yes

NVIDIA Fermi: Yes

AMD GCN: Yes

AMD VLIW4/5: Yes

Intel Gen7.5: Yes

Intel Gen7: Yes

UAV in non-pixel-shader stages:

NVIDIA Kepler: No (technically yes, but the DX limit this option in feature_level_11_0)

NVIDIA Fermi: No (technically yes, but the DX limit this option in feature_level_11_0)

AMD GCN: Yes

AMD VLIW4/5: No (technically yes, but the DX limit this option in feature_level_11_0)

Intel Gen7.5: Yes

Intel Gen7: No

Larger number of UAVs:

NVIDIA Kepler: No (8)

NVIDIA Fermi: No (8)

AMD GCN: Yes (64)

AMD VLIW4/5: No (8)

Intel Gen7.5: Yes (64)

Intel Gen7: No (8)

HLSL FLG:

NVIDIA Kepler: Yes

NVIDIA Fermi: Yes

AMD GCN: Yes

AMD VLIW4/5: Yes

Intel Gen7.5: Yes

Intel Gen7: Yes

------------------------------------------

Optional features:

Tiled Resources support:

NVIDIA Kepler: Yes (tier1)

NVIDIA Fermi: No (tier0)

AMD GCN: Yes (tier2)

AMD VLIW4/5: No (tier0)

Intel Gen7.5: No (tier0)

Intel Gen7: No (tier0)

Feature level support:

NVIDIA Kepler: 11_0

NVIDIA Fermi: 11_0

AMD GCN: 11_1

AMD VLIW4/5: 11_0

Intel Gen7.5: 11_1

Intel Gen7: 11_0

Conservative Depth Output:

NVIDIA Kepler: No

NVIDIA Fermi: No

AMD GCN: Yes

AMD VLIW4/5: Yes

Intel Gen7.5: No

Intel Gen7: No

Comparison of DX11/11.1 Support. Source: http://forums.anandtech.com/showpost.php?p=35396249&postcount=14
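To make the list above easier to query, here is a minimal sketch that transcribes three of its rows (feature level, Tiled Resources tier, and UAV count) into lookup tables. The table and function names are my own, chosen for illustration; the values are copied from the forum post and are not independently verified.

```python
# GPU families in the same order as the forum list.
GPUS = ["NVIDIA Kepler", "NVIDIA Fermi", "AMD GCN",
        "AMD VLIW4/5", "Intel Gen7.5", "Intel Gen7"]

# Three rows transcribed from the list (values as posted on the forum).
FEATURE_LEVEL = dict(zip(GPUS, ["11_0", "11_0", "11_1", "11_0", "11_1", "11_0"]))
TILED_TIER = dict(zip(GPUS, [1, 0, 2, 0, 0, 0]))
MAX_UAVS = dict(zip(GPUS, [8, 8, 64, 8, 64, 8]))


def gpus_where(table, predicate):
    """Return the GPU families whose entry in `table` satisfies `predicate`."""
    return [gpu for gpu in GPUS if predicate(table[gpu])]


full_11_1 = gpus_where(FEATURE_LEVEL, lambda level: level == "11_1")
tiled = gpus_where(TILED_TIER, lambda tier: tier >= 1)
many_uavs = gpus_where(MAX_UAVS, lambda count: count > 8)

print("Feature level 11_1:", full_11_1)
print("Tiled Resources (tier1 or better):", tiled)
print("More than 8 UAVs:", many_uavs)
```

Laid out this way, the pattern in the list is easier to see: the families that report feature level 11_1 are the same ones that expose the larger UAV count, while Tiled Resources support is tracked separately.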

Tuesday, August 27, 2013

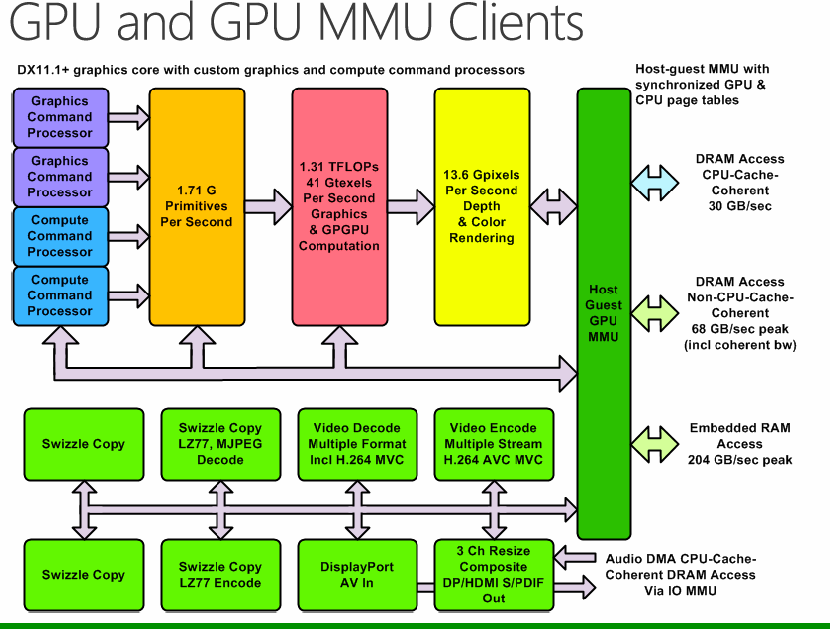

Xbox One Technical Specifications Laid Out In More Detail

Microsoft unveiled some of the power of their new Xbox One.

"In talking up the innards of its upcoming gaming console, Microsoft didn’t reveal any new capabilities of the One—due this November—during a presentation at the Hot Chips conference Monday at Stanford University. But company executives discussed the so-called “speeds and feeds” of the main Xbox One processor in detail, as well as the problems that the new Kinect team had to overcome."

Source: http://www.pcworld.com/article/2047482/xbox-ones-massive-custom-cpu-can-pick-you-out-of-a-lineup.html

Notable among the information were some new slides on the technical specs of the APU/SoC (System on a Chip).

Image Source: TechHive, http://images.techhive.com/images/article/2013/08/xbox-one-gpu-diagram-100051501-orig.png

That makes it a pretty formidable SoC. However, based on the GPU calculations, it is still in line with what would be an HD 7780 (between an HD 7770 and an HD 7790).

For those Kinect enthusiasts, there was information for you too:

"The image sensor chip also controls a 1080p camera that can capture video for Skype or other purposes. Kinect now has a 70-degree viewing angle and can detect movement in larger spaces, ranging from around 0.8 meters to 4.2 meters away. Now it also doesn’t matter if the lighting in the room is good or poor. Kinect can also detect up to six players at a time now"

Source: http://venturebeat.com/2013/08/26/microsoft-disclosed-details-for-the-main-processor-and-the-kinect-image-processor-in-the-xbox-one-video-game-console-today-at-a-chip-design-conference-those-details-are-critical-for-the-kind-of-expe/#CHmQsWBiLPgyKgSy.99

Another question a few of us had was whether the Jaguar cores are x86 (32-bit) or x64. It turns out the Jaguar cores are x64, according to Venturebeat (x64 and x86-64 are two names for the same 64-bit extension of x86).

Thursday, August 22, 2013

PS4 vs. Xbox One - Architecture Break Down

UPDATED: At bottom.

Despite Microsoft doing a 180 on its Xbox One policies (the "Xbox 180"), is there a real difference between the PS4 and Xbox One? The answer to that question is a resounding yes.

PS4 will be using HSA / HUMA. It will be the first known closed system to implement HSA/HUMA from a commercial aspect. Many of us have been fantasizing about the potentials of HSA/HUMA, but now we finally get to see it in action.

HSA / HUMA from AMD:

http://www.amd.com/us/products/technologies/hsa/Pages/hsa.aspx#3

Source for PS4 having HSA / HUMA and very good break down of HSA / HUMA:

NeoGaf: http://www.neogaf.com/forum/showpost.php?p=77493461&postcount=1

Just a note though: since the architecture is almost identical for the Xbox, HSA and HUMA may very well potentially get implemented on their side.

Is that all there is to the differences? No, absolutely not. (THE TL;DR is at the bottom).

Let's get to the APU.

The APU in question is built on AMD's Jaguar cores and is an octo-core part. It is a true octo-core: unlike AMD's Bulldozer-family modules, where two integer cores share one floating point unit, each Jaguar core has its own. Both systems have a variant of this; the detailed specifics are still missing.

The PS4 GPU inside the APU is different too. It is better, in terms of performance and shader count. Microsoft has already announced a frequency boost to make the Xbox One "competitive." At this point in the game, my experience tells me they stumbled on performance and are trying to make it up with clock speed.

There has been a lot of downplay on the PS4's superiority. I call this marketing bullshit.

The PS4 GPU has approximately 1,152 shaders, or more likely "Stream Processors," since it uses the GCN architecture. The estimated GPU throughput is 1.84 TFLOPS.

For comparison's sake, the AMD Radeon HD 7850 (desktop, not mobile) GPU has 1,024 Stream Processors and 1.76 TFLOPS of performance. Its memory bandwidth is 153.6 GB/s. An HD 7870 has 1,280 Stream Processors and 2.56 TFLOPS, with 153.6 GB/s of memory bandwidth.
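The throughput figures quoted here can be reproduced with the usual peak-FLOPS rule of thumb: stream processors times two operations per clock (one fused multiply-add) times clock speed. The sketch below is only a sanity check. The clock speeds (800 MHz for the PS4 GPU, 860 MHz for the HD 7850, 1000 MHz for the HD 7870) are assumptions on my part and are not stated in this post.

```python
def peak_tflops(stream_processors, clock_mhz):
    """Theoretical single-precision peak: one fused multiply-add
    (counted as 2 FLOPs) per stream processor per clock cycle."""
    flops = stream_processors * 2 * clock_mhz * 1e6
    return flops / 1e12


# (stream processors, assumed core clock in MHz); the clocks are assumptions.
gpus = {
    "PS4 GPU": (1152, 800),
    "HD 7850": (1024, 860),
    "HD 7870": (1280, 1000),
}

for name, (shaders, clock) in gpus.items():
    print(f"{name}: {peak_tflops(shaders, clock):.2f} TFLOPS")
```

These are paper numbers only. They say nothing about how well a game actually keeps the shaders busy.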

That would put the paper/theoretical performance of the PS4 somewhere between an HD 7850 and an HD 7870. There is also the factor of D2M programming and HUMA. D2M is direct-to-metal programming: code written for specific hardware runs much better than generic or non-specific code. While the theoretical performance may be that of an HD 7860 (not a real card), the actual performance may be higher and closer to a 7950, especially with the type of memory it has: 8GB GDDR5 (not sure what speed yet). This gives it the potential for 176.0 GB/s of memory bandwidth at the rumored 5500 MHz.

The Xbox One, on the other hand, will have 768 Shader/Stream Processors @ 1.23 TFLOPS, 32MB of eSRAM (rumored to be between 100 and 200 GB/s), and 8GB of DDR3 @ 2133~2300 MHz (about 50~65 GB/s). Memory bandwidth won't really be a limitation for either side this time, and while on paper the Xbox One looks to finesse about the same performance as the PS4, the PS4 will deliver the smoother experience (at least at the start).

Microsoft claims a theoretical output of 192 GB/s, but what I calculated was closer to 133 GB/s, because the slowest component bottlenecks performance.
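For the main memory pools, peak bandwidth is simply bus width times effective transfer rate. Here is a minimal sketch of that arithmetic. The 256-bit bus width for each part and the 4800 MT/s rate for the HD 7850 are assumptions that do not appear in this post. The sketch does not try to reproduce my 133 GB/s estimate for the combined DDR3 + eSRAM path.

```python
def bandwidth_gb_s(bus_width_bits, transfer_rate_mt_s):
    """Peak bandwidth in GB/s: bytes per transfer times
    millions of transfers per second, divided by 1000."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mt_s / 1000


# 256-bit buses are assumed for all three parts.
ps4_gddr5 = bandwidth_gb_s(256, 5500)   # rumored 5500 MHz effective
xbox_ddr3 = bandwidth_gb_s(256, 2133)   # DDR3 at 2133
hd7850 = bandwidth_gb_s(256, 4800)      # assumed 4800 MT/s GDDR5

print(f"PS4 GDDR5:     {ps4_gddr5:.1f} GB/s")
print(f"Xbox One DDR3: {xbox_ddr3:.1f} GB/s")
print(f"HD 7850:       {hd7850:.1f} GB/s")
```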

The comparable desktop GPU to the Xbox One's would fall between an HD 7770 and an HD 7790. That is a moderate step down from a theoretical HD 7860 by any standard.

Summary (TL;DR):

The CPU Performance should be on par with each other, in favor of the Xbox One (cloud potential.)

The CPU, while important, is not the primary ingredient in driving video games; it's the GPU.

| | PS4 GPU | Xbox One GPU |
|---|---|---|
| Processors | 1152 | 768 |
| TFLOPS | 1.84 | 1.23 |
| Memory | 8GB GDDR5 | 8GB DDR3 w/ 32MB eSRAM |
| Mem Band | 176.0 GB/s | 68.3 GB/s w/ 102 GB/s ~ 133 GB/s with overhead/bottleneck |

Xbox One CPU has the potential for 3 VMs per machine providing CPU performance.

I honestly can't see how this will help video games specifically, but it's cool nonetheless.

PS4 will use HSA/HUMA, very cool.

PS4 GPU has 50% more processors.

PS4 TFLOPS is about 49.59% higher.

Equal capacity in memory.

Memory Bandwidth (should be good enough for both systems.)

Potentially equal bandwidth on memory or more according to Microsoft (+9%)

By my calculations/estimate the PS4 has 32.33% more bandwidth.

PS4 HDD can be replaced by end-users (SSD here I come!)

While both systems should be bought based on the games you wish to play (i.e. exclusives :( ), there shouldn't be any question as to which has the beefier hardware. The PS4 has roughly 50% more raw GPU power than the Xbox One.
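The percentages in the summary come straight from the ratios of the figures listed. A quick sketch of that arithmetic, using only numbers from this post (the helper name `percent_more` is mine):

```python
def percent_more(a, b):
    """How much larger `a` is than `b`, as a percentage of `b`."""
    return (a / b - 1) * 100


processors = percent_more(1152, 768)       # PS4 vs Xbox One stream processors
tflops = percent_more(1.84, 1.23)          # PS4 vs Xbox One TFLOPS
bandwidth = percent_more(176.0, 133.0)     # PS4 vs my Xbox One estimate
ms_claim = percent_more(192.0, 176.0)      # Microsoft's claim vs PS4

print(f"Processors: {processors:.2f}% more on PS4")
print(f"TFLOPS:     {tflops:.2f}% more on PS4")
print(f"Bandwidth:  {bandwidth:.2f}% more on PS4 (against 133 GB/s)")
print(f"Bandwidth:  {ms_claim:.2f}% more on Xbox One (against Microsoft's 192 GB/s)")
```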

Eurogamer.net did a very detailed breakdown as well!

http://www.eurogamer.net/articles/digitalfoundry-can-xbox-one-multi-platform-games-compete-with-ps4

UPDATE:

I have followed the fiasco today with PCPER vs. VRZONE.

PCPER thought they debunked it: http://www.pcper.com/news/General-Tech/Sony-PlayStation-4-PS4-will-NOT-utilize-AMD-hUMA-Kabini-based-SoC?utm_source=twitterfeed&utm_medium=twitter

VRZONE has confirmed HSA: http://vr-zone.com/articles/xbox-dev-xbox-one-answer-ps4s-huma-technology/52662.html#ixzz2cjmZ3miR

AMD's current stance seems to be that they can neither confirm nor deny it now, although they were the original source of the confusion.

Wednesday, August 21, 2013

WinPE / BCDBOOT: Failure when attempting to copy boot files. (Solution)

From time to time, when repairing an MBR or just applying BCDBOOT for a new image, you may get this error: Failure when attempting to copy boot files.

When this happens, your new image or computer will not boot from hard drive.

Some may have investigated even further and discovered you can "force" BCDBOOT onto your drive with the /s option and it appears to work correctly. Even then, your new image still may not boot! Why?

Sometimes it works and sometimes it doesn't, seemingly at random. It isn't necessarily the same machine, nor the same OS or OS version. What gives? I have finally discovered for myself what the hell is wrong with this thing.

The trick I found out was HOW I was getting into WinPE or PXE. If I am doing a LEGACY boot of the USB (WINPE), BCDBOOT would work successfully. If I am doing a UEFI/EFI boot WinPE environment, it would not work! Puzzling, but at least I know how to fix this!

Basically what happens:

If you boot your WinPE drive via the UEFI interface / options, BCDBOOT will default to working with GPT and EFI firmware. If the EFI System Partition is missing because it was never created, you get: Failure when attempting to copy boot files.

If you boot your WinPE drive via BIOS / LEGACY options, BCDBOOT will default to try working with MBR and BIOS firmware.

That's okay if you can't or don't want to run LEGACY!

You just need some EXTRA options on the command to generate the old MBR-style boot setup!

Step One:

Your Windows partition is still active. We will call it W:\

Step Two:

Your System partition exists! We will call it S:\

Step Three:

You have applied your image (or the location of Windows already exists.) to W:\

Step Four:

The command to finalize an MBR drive (while booted in UEFI mode) is this:

bcdboot W:\Windows /s S: /f BIOS

Conversely, if you booted the USB drive in non-EFI (legacy) mode, but your target drive is GPT and UEFI, you will want to run this:

bcdboot W:\Windows /s S: /f UEFI

Here S: is the EFI System Partition, which those drives require.
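The rule behind both commands is that the /f value should follow the target disk, not the way WinPE itself was booted. Here is a small sketch of that decision. The helper names are hypothetical and the script only builds the command line, it does not run it. As far as I know, bcdboot's documented /f values are UEFI, BIOS and ALL.

```python
def firmware_for_disk(partition_style):
    """Map the target disk's partition style to a bcdboot /f value."""
    mapping = {"MBR": "BIOS", "GPT": "UEFI"}
    try:
        return mapping[partition_style.upper()]
    except KeyError:
        raise ValueError(f"unknown partition style: {partition_style!r}")


def bcdboot_command(windows_dir, system_drive, firmware):
    """Build the bcdboot argument list: /s selects the system
    partition, /f forces the firmware type of the boot files."""
    if firmware not in {"BIOS", "UEFI", "ALL"}:
        raise ValueError(f"unsupported firmware type: {firmware!r}")
    return ["bcdboot", windows_dir, "/s", system_drive, "/f", firmware]


# MBR target disk, regardless of how WinPE was booted.
mbr_cmd = bcdboot_command(r"W:\Windows", "S:", firmware_for_disk("MBR"))
# GPT target disk with an EFI System Partition mounted as S:.
gpt_cmd = bcdboot_command(r"W:\Windows", "S:", firmware_for_disk("GPT"))

print(" ".join(mbr_cmd))
print(" ".join(gpt_cmd))
```

Passing /f explicitly removes the dependence on how WinPE was booted, which is what made the failure look random.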

Hope that helps!

AMD Catalyst Driver 13.8 Beta 2 (Microstutter Fixing / CFX Drivers)

Sorry for the delay, AMD's second driver attempt to reduce microstutter in GPUs is now out. Link below.

Feature Highlights of The AMD Catalyst 13.8 Beta2 Driver for Windows:

Saints Row 4: Performance improves up to 25% at 1920x1280 with Ultra settings enabled

Splinter Cell Blacklist: Performance improves up to 9% at 2560x1600 with Ultra settings enabled

Final Fantasy XIV: Improves single GPU and CrossFire performance

Van Helsing: Fixes image quality issues when enabling Anti-Aliasing through the AMD Catalyst Control Center

Far Cry 3 / Far Cry 3 Blood Dragon: Resolve corruption when enabling Anti-Aliasing through the Catalyst Control Center

RIPD: Improves single GPU performance

Minimum: Improves CrossFire performance

Castlevania Lords of Shadow: Improves CrossFire performance

CrossFire: Frame Pacing feature – includes improved performance in World of Warcraft, Sniper Elite, Watch Dogs, and Tomb Raider

Doom 3 BFG: Corruption issues have been resolved

Known Issues of The AMD Catalyst 13.8 Beta2 Driver for Windows:

CrossFire configurations (when used in combination with Overdrive) can result in the secondary GPU usage running at 99%

Enabling CrossFire can result in the PCI-e bus speed for the secondary GPU being reported as x1

Bioshock Infinite: New DLC can cause system hangs with Frame Pacing enabled – disable frame pacing for this title to resolve.

http://support.amd.com/us/kbarticles/Pages/AMDCatalyst13-8WINBetaDriver.aspx

Saturday, May 11, 2013

GTX 780M Benchmarks (Laptop, not desktop GPU)

Some leaked benchmarks can be seen. Not too shabby for a laptop GK104 modified chip. These would be the same as the 6xx family in nVidia.

Battlefield 3 for example:

Source: http://www.game-debate.com/news/?news=5440&graphics

Monday, May 6, 2013

Xbox 3/720 Durango is going to potentially have Offline support.

"Durango [the codename for the next Xbox] is designed to deliver the future of entertainment while engineered to be tolerant of today's Internet." It continues, "There are a number of scenarios that our users expect to work without an Internet connection, and those should 'just work' regardless of their current connection status. Those include, but are not limited to: playing a Blu-ray disc, watching live TV, and yes playing a single player game."

It is very similar in idea to Steam's activation and offline play modes. That is good for everyone; however, I would love to not be connected at all and still have games work right out of the box.

Source: http://arstechnica.com/gaming/2013/05/microsoft-next-xbox-will-work-even-when-your-internet-doesnt/

The funny thing I noticed, first pointed out by ArsTechnica, was that this was distributed in an internal email at Microsoft. If it is to be believed, they sent out a mass email confirming that things have not changed (i.e. there is an offline mode). Is it just me, or is it odd to send an email update stating that nothing has changed just to reiterate the offline mode? I do think it's odd, and honestly, the backlash Microsoft would get for an always-online console would be pretty harsh. With the less than enthusiastic reception of Windows 8, it seems Microsoft may have lost touch with its consumer base.

Tie this in with the departure of Adam Orth after his comments pissed off the majority of the gaming community (even those who don't mind always-online devices) by telling them to "#dealwithit" on Twitter. His main contention was that having internet is like having electricity and running water. It isn't, and with the economy in the state it is, some people have forgone home internet. To be honest, if they chose this route for a console, that is their prerogative, and I can understand it (not necessarily like it or desire it, but understand it for sure). The way Adam came off, though, sounded to me like a spoiled brat who should know better. It also makes me wonder if his opinion is shared at Microsoft, just not publicly. This wasn't some lowly Microsoft peon after all; he was a creative director at Microsoft Studios.

Source: http://www.theverge.com/2013/4/10/4210870/adam-orth-leaves-microsoft

Source: http://www.theverge.com/2013/4/5/4185938/adam-orth-speaks-on-required-internet-connection-for-durango-rumors

Nexus 5 Image Leak? Leak suggests LG will be making the next Nexus.

Looks like Google may be sticking with LG for the Nexus 5! Which is good, because the Nexus 4 was a killer phone with very few issues.

Source: http://hexus.net/mobile/news/android/54893-lg-now-working-google-nexus-5-smartphone/

This is also interesting because, if true, this earlier prototype of the Nexus 5 may be incorrect. Only time will tell.

Source: http://hexus.net/mobile/news/android/53049-google-lg-nexus-5-prototype-leaked-specs-images/

LucasArts revived by... EA?

EA's Frank Gibeau (President of EA Label): "the games will be entirely original with all new stories and gameplay.... may borrow from films"

Engine to be used: Frostbite 3 (ala BF4).

Source: http://www.theverge.com/2013/5/6/4306040/electronic-arts-gets-exclusive-deal-to-develop-star-wars-video-games

Source: http://www.ea.com/news/ea-and-disney-team-up-on-new-star-wars-games

GTX 760M Specifications Leaked/Rumored

Judging by the specs and fabrication (looking to be GK106/107), I would anticipate the performance to be pretty impressive for a laptop GPU, but more than that, it's going to run a lot cooler (assuming there is a not-crummy cooling system in the laptop).

Image courtesy of ChipHell forums.

Other specs include the 750M, 745M, 740M. The 780M and 770M should already be out, but also mentioned in the article is some love for Team Red, the HD8970M is expected later this year. No specs other than it may be a rebrand of the HD7970M which would be a little lame if true.

Source: http://wccftech.com/nvidia-geforce-gtx-760m-gpuz-revealed-features-gk106-768-cores/

Saturday, May 4, 2013

GTX 780 is possibly Titan LE and releasing the 23rd, according to leaks.

The Geforce GTX Titan is based on the K20 Tesla units from nVidia's high-end computing line. Titan is in fact slightly slower than a Tesla due to a few key laser cuts to the die. More than likely, these chips did not qualify as full Tesla cards but were not complete failures: not broken, just not fully yielding Teslas. The GK110 is a powerful chip, and frankly it looks like the GTX 780 is going to be the Titan LE, also a GK110, but again a little weaker than a Titan.

GTX 780 also is rumored to release May 23rd, followed in one week by the GTX 770 which is a rehash of the GTX 680 design.

http://videocardz.com/41239/nvidia-geforce-gtx-780-to-be-released-on-may-23rd

http://www.fudzilla.com/home/item/31265-geforce-gtx-780-coming-on-may-23rd

http://www.sweclockers.com/nyhet/16955-geforce-gtx-780-for-1080p-120-hz-gaming

Friday, May 3, 2013

i7 4770k at 7 GHz? Look out Sandy Bridge, Haswell might be aiming to be the overclocking enthusiast's replacement.

Not just the frequency, check out that voltage! Ouch! Don't try that at home kids.

Source: http://wccftech.com/intel-core-i7-4770k-overclocked-7-ghz-z87-motherboards-including-asus-maximus-vi-extreme-spotted/

In other Haswell overclocking news, the i5 4670K hit 6.2 GHz at an amazing 1.256v!

Source: http://www.overclock.net/t/1387728/ocaholic-wccf-intel-core-i7-4770k-overclocked-to-7-ghz-several-z87-motherboards-including-asus-maximus-vi-extreme-spotted/0_50#post_19881544

AMD Radeon HD 7990, CrossfireX, and Stutter? AMD may have a solution soon.

Unfortunately, every once in a while something divides the tech community and its enthusiasts, much like the polarization of Congress. This time it is AMD microstuttering. Most recently there has been "confirmation" of microstutter, which has been ignored, much like global warming. Some AMD users have continually denied its existence despite AMD admitting to it, somewhat casually, and then showing its users that it can be fixed. When you remove the nVidia GPUs from the equation completely, in my mind you have a slam-dunk case: this is not a fanboy issue, this is an AMD issue.

An example of AMD vs. AMD Fixed vs. nVidia. Ideally, you would want to be the green line (Geforce GTX Titan):

Jesus H. Jones. Look at that orange microstutter!

I am not going into the details of the full story; it literally gives me a headache, both the idiots and the actual microstutter. The point is that I wanted to leave a piece of good news. AMD has promised at least to give users an option to enable smoother frame rates (ala frame metering) to significantly increase image cohesion during transitions. In other words, they remove the majority of runt frames, which seem to make the screen stutter for fractions of a second and have been noticeable since about the 58xx generation of AMD Radeon HDs.
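To illustrate what a "runt frame" looks like in the data, here is a rough sketch that flags frames shown for only a small fraction of the typical frame time. The 20% threshold, the sample frame times, and the function names are all assumptions made for illustration. This is not PCPer's actual Frame Rating method or AMD's driver logic.

```python
from statistics import median


def find_runts(frame_times_ms, runt_fraction=0.2):
    """Return indices of frames displayed for less than
    `runt_fraction` of the median frame time (assumed threshold)."""
    typical = median(frame_times_ms)
    return [i for i, t in enumerate(frame_times_ms)
            if t < runt_fraction * typical]


def fps(frame_count, total_ms):
    """Average frames per second over a span of `total_ms` milliseconds."""
    return frame_count / (total_ms / 1000)


# Made-up CrossFire-style trace: long frames alternating with tiny ones.
sample = [30.0, 3.0, 31.0, 2.5, 29.5, 3.5, 30.5, 16.0]

runts = find_runts(sample)
reported = fps(len(sample), sum(sample))
effective = fps(len(sample) - len(runts), sum(sample))

print("Runt frame indices:", runts)
print(f"Reported FPS:  {reported:.1f}")
print(f"Effective FPS: {effective:.1f} (runts not counted)")
```

The gap between the reported and effective numbers is why a plain FPS counter can look fine while the game still feels like it stutters.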

Anticipate the drivers being made public around the June/July time frame. So.... September.

http://techreport.com/news/24748/amd-says-frame-pacing-beta-driver-due-in-june-july-timeframe

Here is a link to the site at the forefront of bringing a metric to observe the difference in AMD and nVidia single/multi GPU setups so that there is a "scientific" way to present to AMD and request it to be addressed.

http://www.pcper.com/

Specifically:

http://www.pcper.com/reviews/Graphics-Cards/AMD-Radeon-HD-7990-6GB-Review-Malta-Gets-Frame-Rated

http://www.pcper.com/reviews/Graphics-Cards/Frame-Rating-AMD-Improves-CrossFire-Prototype-Driver

And for you numb nuts saying that nVidia GPUs are no different, or cheating because they have "hardware" to enable frame metering, or whatever retarded excuse you have, here is AMD showing you they can fix it on AMD cards! To me the difference is day and night on an AMD setup vs. nVidia setup.

Geforce GTX 780/7xx & Radeon HD 8970 and DirectX 12? That is what this leak is showing.

These screenshots are not guaranteed and are purely unverified at this point, especially given the level of Photoshopping skill on the internet (a la 4chan and reddit). We should also note that there are no known plans for DX12. If it is possibly in the wild (a la Windows Blue this September), these two GPU vendors would most assuredly know better than us.

No leak yet on a launch date for AMD's cards, but the rumors for nVidia point to this month, some as early as May 21st.

Original sources of information:

http://videocardz.com/41250/nvidia-geforce-gtx-780-and-amd-radeon-hd-8970-spotted-cpuz-database

http://www.ocaholic.ch/modules/news/article.php?storyid=6786

Update: After investigating further myself, I really believe this display of DX12 makes no sense, and it should be considered at best a potential hiccup of CPU-Z. In fact, the leak of the HD 8970 with only DX11.0 seems odd, as I would have predicted both cards taking advantage of the Win8 release of DX11.1.

Although I haven't seen the white paper for DX11.1, it is possible that DX11.1 is a version bump on the software side only. If that is the case, then all cards could retroactively support it, even if they are in fact branded as DX11.0.

Ready to Overclock your Radeon HD 7950 / 7970 but the tools don't seem to be working? I got this.

Getting started was a little bit tricky, coming in late to the 7970 game around April 2012 instead of February 2012. This little write up shows, using just Afterburner and a couple of DLLs, how to get into overclocking the 79xxs, and removing some of the limits imposed by the drivers/registry.

There are a few ways to go about doing this, with one or two patches floating around, but this is just what I used to get things rolling.

Software I Used:

Using the latest Afterburner 2.2.0 Beta 15, Catalyst 12.4 Beta, and 12.3 CAP1. This also works with more recent drivers (I verified).

Best Way To Start:

Do a clean uninstall using AtiMan, then install your choice of drivers and CAP profile.

Download the missing atipdl64.dll / atipdlxx.dll. Link & Source: http://forums.guru3d.com/showthread.php?t=359671

Place the .dlls in the MSI Afterburner folder.

Note: You can also place them in their corresponding System32 & SysWow64 folders, but it's not necessary. It works from the Afterburner folder.

Launch Afterburner and edit the settings below. Some users report this is not necessary, but for me to have voltage control it was. Try it without first, and if you still don't have access, try enabling low-level access as I had to.

Important, follow these instructions exactly:

Edit Afterburner's shortcut and change the Target line: "C:\Program Files (x86)\MSI Afterburner\MSIAfterburner.exe" /XCL

Start Afterburner one time (it may pop up with a window asking you to reboot) and it will not start.

Go back in, delete the /XCL part of the target line.

Reboot machine now, and then re-launch Afterburner.

End Result:

Conflicting Clock Settings / BSODs:

If you have enabled Catalyst's own overclocking menus, be careful changing anything, as there have been reports of some users getting BSODs with Afterburner or other utilities running. Even 2.2 Beta 15 has a few glitches; it's not 100% perfect.

BSOD While Idle:

CFX users may want to (probably have to) disable ULPS to prevent random BSODs while idling or starting games. Atypical stop codes of 37, 7E and even 116 have been seen without disabling Ultra-Low Power State / ZeroCore modes. Simply open the registry using Regedit, search for every instance of "enableulps", and switch it from 1 to 0. I am told enableulps_NA doesn't matter, but I just change them all to 0. There are about 8 instances of it in the registry, and not all of them are 1, but get them all changed and don't forget to reboot for it to take effect.
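If clicking through Regedit eight times sounds tedious, here is a minimal Python sketch of the same search-and-flip idea, run against the text of an exported .reg file instead of the live registry. Assumptions, loudly: the function name, the sample key path, and the sample values are made up for illustration, and I am assuming the setting is exported as a dword line. Check your own export before trusting it.

```python
import re

# Matches lines such as "EnableUlps"=dword:00000001 (name is case-insensitive).
# "EnableUlps_NA" is deliberately NOT matched; it is left alone here.
_ULPS_LINE = re.compile(r'^("EnableUlps")=dword:([0-9a-fA-F]{8})\s*$', re.IGNORECASE)

def disable_ulps(reg_text):
    """Return (new_text, changed_count) with every EnableUlps dword set to 0."""
    out, changed = [], 0
    for line in reg_text.splitlines():
        m = _ULPS_LINE.match(line)
        if m and int(m.group(2), 16) != 0:
            line = m.group(1) + "=dword:00000000"
            changed += 1
        out.append(line)
    return "\n".join(out), changed

# Hypothetical export snippet; the key path is a placeholder, not a real one.
sample = r'''Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\ExampleKey\0000]
"EnableUlps"=dword:00000001
"EnableUlps_NA"="1"

[HKEY_LOCAL_MACHINE\SYSTEM\ExampleKey\0001]
"EnableUlps"=dword:00000000
'''

patched, count = disable_ulps(sample)
print("entries changed:", count)
```

Working on an export keeps the original file around as a backup. Regedit exports are typically saved as UTF-16, so open the file with that encoding if you feed a real one through this, and you still need to import the result and reboot.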

Power Draw Limit:

You can change PDL in Afterburner by clicking on Settings. Once in Settings, the PDL slider is on the main tab towards the bottom. To make changes, slide it to where you want and click Okay. ONCE you are looking back at the main tuning window of AB, you will see that the Apply button has lit up. You must click APPLY to apply the PDL change you just made; it is not effective until you do.

Memory OC:

We are getting a little bit of evidence showing that memory overclocks of 350~400 MHz are achievable, some even higher, and they are equating to gains of 5% to 10% in performance (average FPS). Any higher and I am sure it will either crash, or ECC will begin correcting so frequently that performance is lost. Find a sweet spot that requires no extra voltage and, if you can, keep an eye on memory temps. I noticed that the thermal padding XFX used is relatively thick (>2mm) and may trap more heat than it transfers to the heat sink.

GPU OC:

Pretty easy to overclock once the controls are back in our hands. Higher clocks = more performance. One small catch: a lot of users on air have noticed that as temps rise, stability declines, even at temperatures we consider normal for a GPU (the 70~85C range). Consider this when overclocking; stability may require additional cooling and whatnot. I am currently on water and I am 100% limited by the silicon, not temperature, so I have not personally witnessed this.

ASUS OC BIOS:

There is an ASUS OC BIOS, which unlocks the voltage to 1.400v. The only OC tool that can use it, I believe, is ASUS GPU Tweak. The latest version at the moment is 2.0.83, and it is really buggy for me (CrossFireX) when it comes to synchronizing both cards. Switching back and forth between both GPUs causes me to lose my max voltage and leaves me stuck at 1.125v. It also doesn't apply overclocks to the second GPU unless I do it manually, which again causes me to lose the voltage. I recommend not using this at the moment if you are benching multiple cards.

You can get it here:

Asus.HD7970.3072.120104.zip (41k .zip file)

ASUS GPU Tweak: http://rog.asus.com/forum/showthread.php?2624-GPU-Tweak-(Beta)

You will also need the latest ATi Winflash: http://www.techpowerup.com/downloads/2107/ATI_Winflash_2.0.1.18.html

Make sure to get a backup of the original ROM before continuing! You can simply do this by grabbing the VBIOS with GPU-z. DON'T FORGET!

Make sure you are running as Admin when you try. If it fails because a vendor-id warning appears, try the command to force the flash:

atiwinflash -f -p 0 ASUSOC.bin

Multicards should be:

atiwinflash -f -p 0 ASUSOC.bin

atiwinflash -f -p 1 ASUSOC.bin

atiwinflash -f -p 2 ASUSOC.bin etc.
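For anyone with a stack of cards, the pattern above is just one command per adapter index. A tiny Python sketch that only builds the command lines (it does not run anything) might look like the following. The function name is hypothetical, and it uses only the flags and file name shown above.

```python
def flash_commands(card_count, rom_file="ASUSOC.bin"):
    """Build one force-flash command line per adapter index, starting at 0."""
    return ["atiwinflash -f -p %d %s" % (i, rom_file) for i in range(card_count)]

# Print the commands for a three-card setup so they can be reviewed first.
for cmd in flash_commands(3):
    print(cmd)
```

Printing the commands instead of executing them is deliberate: you get to read each line before you paste it into an Admin prompt.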

WARNING: Flashing is risky. No one, including myself, takes responsibility for whatever you do to your cards. That applies to all the aforementioned overclocking as well.

Voltage Fluctuation:

It doesn't hurt to keep an eye on voltages from a few utilities, but the only way to be 100% sure what voltage you are seeing is with a voltmeter. Many users have reported spikes in the voltage sensor readings in Afterburner, GPU-Z, and HWiNFO32. HWMonitor and AIDA64 also provide voltage readings, I believe. Use your best judgement in reading the sensors, and trust your gut. I usually play it safe, but I am also on water, so I have more wiggle room regarding voltage fluctuations. Being careful is the resounding message I am trying to get across.

One Final Thing:

Different benchmarks and games can and probably will have different max overclocks. Don't be disappointed if your max overclock crashes in BF3 but nothing else; be flexible with the overclocks. Don't forget, just because a game crashes does not prove you or your overclocks are fully at fault. It could be nothing more than a game bug or, more likely, a driver issue. Keep chugging away and have fun!

Other Resources:

I am RagingCain at Overclock.net ^.^

Right click context menu missing on the Windows 7/Vista/XP Start-Menu? Try this!

Really simple fix: it's just a setting change internal to the Start Menu, and yes, it CAN get changed by itself, as I discovered to my amazement one Saturday morn!

A lot of suggestions said do a system restore, scan the hard-disk, but this is where you should go to first before deciding Windows has packed in and needs a reformat/repair.

Problem / Symptoms:

Unable to Right Click icons in the Start-Menu.

Unable to Drag/Drop icons in the Start-Menu.

Unable to perform all secondary actions associated with the previous two, such as pinning or deleting.

Cause:

User/System changed settings on Start-Menu Properties.

How To Fix:

Right-Click the Start-Menu button or ORB if you have Aero Basic/Aero running, and select Properties.

Click the Customize... button on the Start Menu tab. Properties should open on this tab automatically.

Once open, scroll down about halfway and re-enable Context Menus and Dragging and Dropping. If it is already enabled but still not working, try disabling it, click Apply, close everything out, then go all the way back in and re-enable it to confirm it changed.

If yours is in fact already enabled yet the context menu is still not working, then I would take heed and investigate further. It may be a sign of much larger problems overall, such as scareware, malware, viruses, adware, Grumpy Cat, bad Windows etc.

When in doubt, scan for it all and run "sfc /scannow" in a CMD prompt!
